Imagine whispering just a few words into a machine — and watching an idea you’ve only pictured in your mind take form right in front of you.

A poem that’s never been written.

An image the world has never seen.

A melody that feels both familiar and new.

Generative AI doesn’t merely copy what it knows; it recombines patterns, interprets nuance, and surprises us with creations we didn’t think possible from silicon and code.

In this article, we’ll peel back the layers to uncover why this technology can truly generate — and why the secret lies not in magic, but in the elegant machinery of language, learning, and imagination.

🏗️ Introduction: The Architect’s Lens

Generative AI isn’t just a technological leap — it’s a design revolution. To understand why it can generate, we must look beyond surface‑level capabilities and into the architectural soul of these systems.

This post breaks down the full stack — from input to imagination — through the eyes of a systems architect. In short: from tokens and embeddings to attention mechanisms and latent space composition.

To make the journey concrete, we’ll anchor our explanations to two examples:

Text‑only case [Example 1]: predicting the next word in a sentence like “The curious cat climbed…” to illustrate tokenization, embeddings, and autoregressive generation.

Multi‑modal case [Example 2]: creating an image of “a German Shepherd wearing a red baseball cap” to show how the same principles extend to vision, guiding pixels into place through cross‑attention and iterative refinement.

So let’s dive in.

But if you’re new to this series, you might want to start with my previous post, "What is Generative AI?", where I unpack the fundamentals of what generative AI is, how it differs from traditional AI, and why it’s capturing so much attention. That foundation will make this deep dive into the “how” even more rewarding.

🔍 The Core Concepts Behind Generative AI

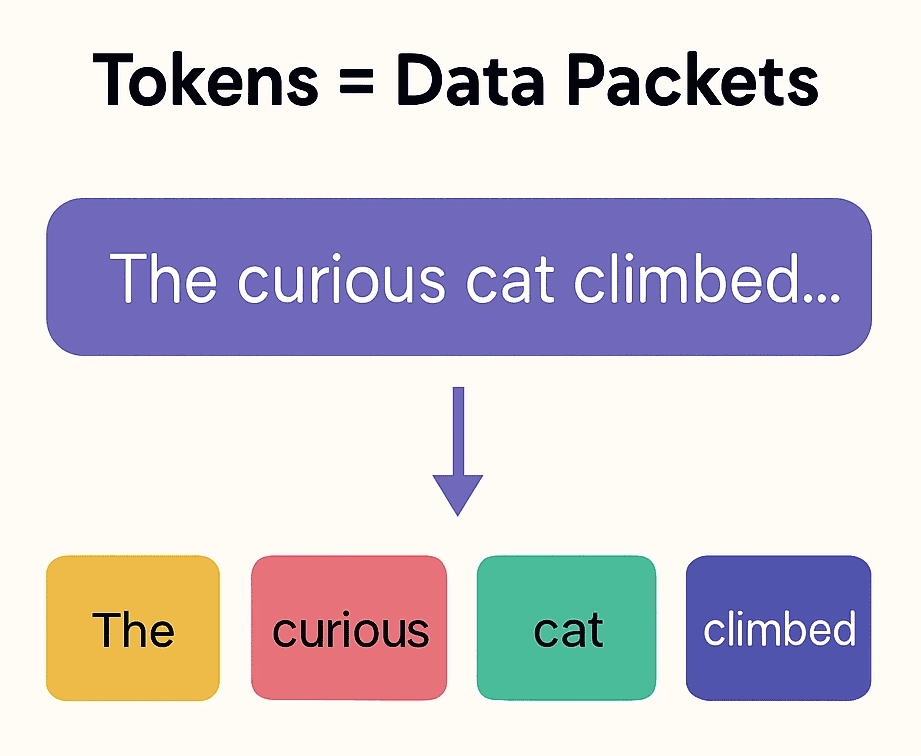

1. Tokens = Data Packets

What they are: The smallest units of meaning a model processes — words, subwords, or characters for text; pixels or patches for images.

Example 1: “The curious cat climbed…” → Tokens: [“The”, “curious”, “cat”, “climbed”]

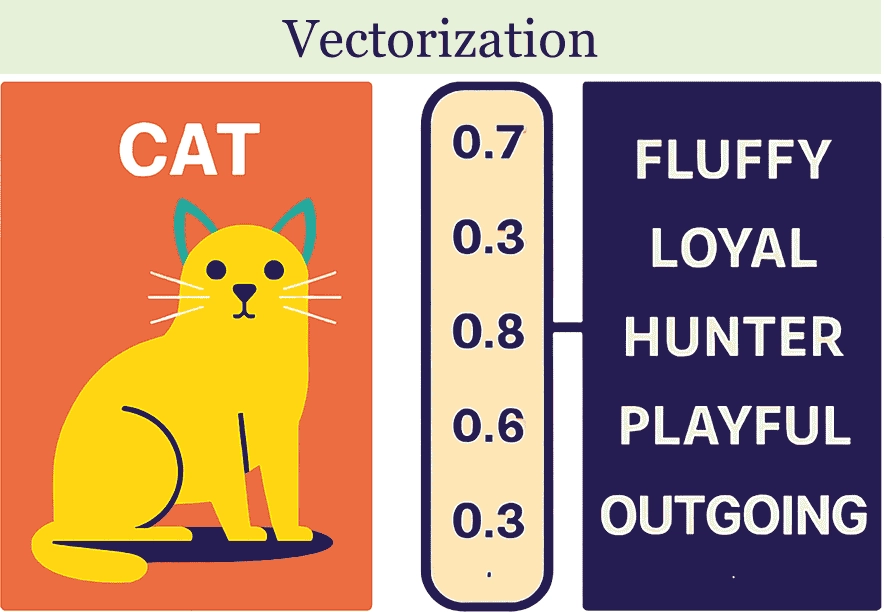
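
To make the idea concrete, here is a minimal sketch of word-level tokenization for Example 1. It is an illustration only: production models typically use subword tokenizers (such as byte-pair encoding), and the helper names `tokenize` and `build_vocab` are hypothetical.

```python
import re

def tokenize(text: str) -> list[str]:
    """Toy word-level tokenizer: keep runs of letters, drop punctuation."""
    return re.findall(r"[A-Za-z]+", text)

def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab: dict[str, int] = {}
    for token in tokens:
        vocab.setdefault(token, len(vocab))
    return vocab

tokens = tokenize("The curious cat climbed…")
vocab = build_vocab(tokens)
token_ids = [vocab[t] for t in tokens]

print(tokens)     # ['The', 'curious', 'cat', 'climbed']
print(token_ids)  # [0, 1, 2, 3]
```

The model never sees the words themselves, only the integer IDs, which is why the next step (embeddings) is needed to give those IDs meaning.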

2. Embeddings — Turning Tokens into Meaning

What they are: Numerical vectors that capture the meaning and relationships of tokens.

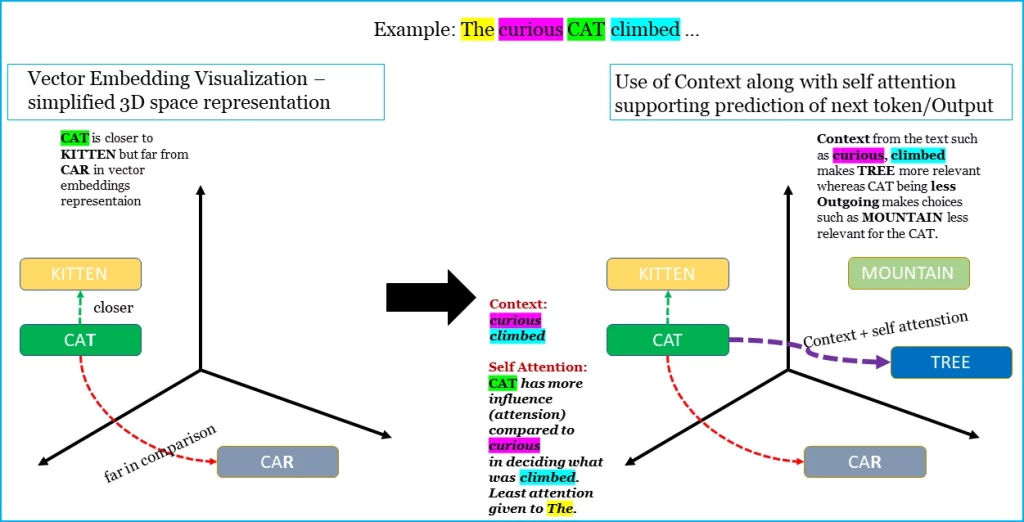

Example 1: Instead of treating “cat” and “car” as just letters, embeddings place them in a mathematical space where similar meanings are closer together — like giving each word a GPS coordinate in a world of meaning.

- “cat” is close to “kitten” and far from “car” in embedding space.
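
A small sketch can show what “closer together” means in practice. The three-dimensional vectors below are hand-picked for illustration (real embeddings are learned and have hundreds or thousands of dimensions), and cosine similarity is one common way to measure closeness.

```python
import math

# Hypothetical embeddings, chosen by hand purely for illustration.
embeddings = {
    "cat":    [0.90, 0.80, 0.10],
    "kitten": [0.85, 0.90, 0.05],
    "car":    [0.10, 0.20, 0.95],
}

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

cat_kitten = cosine_similarity(embeddings["cat"], embeddings["kitten"])
cat_car = cosine_similarity(embeddings["cat"], embeddings["car"])

print(f"cat vs kitten: {cat_kitten:.3f}")  # close to 1: similar meaning
print(f"cat vs car:    {cat_car:.3f}")     # much lower: unrelated meaning
```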

3. Context Window — The Model’s Active Memory

What it is: The span of tokens the model can consider at once.

Example 1: A large enough window lets the model remember that the “cat” was “curious,” influencing the choice of “tree” over “sofa,” while other traits in the context (such as the cat being less outgoing) make “mountain” irrelevant.
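
As a rough sketch, a context window can be pictured as keeping only the most recent tokens. Truncating from the left is just one simple strategy, and `apply_context_window` is a hypothetical helper, not part of any library.

```python
def apply_context_window(tokens: list[str], window_size: int) -> list[str]:
    """Keep only the most recent `window_size` tokens; older ones are dropped."""
    if window_size <= 0:
        return []
    return tokens[-window_size:]

tokens = ["The", "curious", "cat", "climbed"]

print(apply_context_window(tokens, 4))  # ['The', 'curious', 'cat', 'climbed']
print(apply_context_window(tokens, 2))  # ['cat', 'climbed']
```

With a window of only two tokens, “curious” falls out of view, so it can no longer influence the prediction.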

4. Attention Mechanisms — Deciding What Matters

What they are: Algorithms that determine how much each token should influence others.

Example 1: When predicting the next word after “climbed,” “cat” gets more weight than “curious.”

A simplified process for our example can be visualized like below.
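
As a rough illustration, the weighting step can be sketched with scaled dot-product attention in plain Python. The query and key vectors are invented for this example (in a trained model they come from learned projections), and the sketch stops at the weights rather than going on to blend the value vectors.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query: list[float], keys: list[list[float]]) -> list[float]:
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

tokens = ["The", "curious", "cat", "climbed"]
# Hypothetical key vectors, one per token.
keys = [
    [0.1, 0.0, 0.1, 0.0],  # The
    [0.5, 1.0, 0.0, 0.2],  # curious
    [2.0, 0.5, 0.3, 0.1],  # cat
    [0.8, 0.2, 1.0, 0.3],  # climbed
]
# Hypothetical query vector for the position after "climbed".
query = [1.5, 0.2, 0.5, 0.0]

weights = attention_weights(query, keys)
for token, weight in zip(tokens, weights):
    print(f"{token:>8}: {weight:.2f}")
```

With these made-up numbers, “cat” receives the largest weight and “curious” a smaller one, mirroring the example above.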

5. Latent Space — The Model’s Internal Design Space

What it is: A compressed, abstract space where learned patterns are stored and combined.

Example 1: “Curious cat climbing a tree” exists as a concept cluster, enabling variations like “playful kitten scaling a branch.”
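
One way to picture “variations” in latent space is to move between two nearby points. The sketch below assumes two hypothetical two-dimensional latent codes and uses simple linear interpolation; real latent spaces are far higher-dimensional and are not guaranteed to behave this neatly.

```python
def lerp(a: list[float], b: list[float], t: float) -> list[float]:
    """Linear interpolation between vectors: t=0 returns a, t=1 returns b."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]

# Hypothetical latent codes for two related concepts.
curious_cat_tree = [1.0, 0.0]
playful_kitten_branch = [0.8, 0.4]

# A point halfway between the two: a blend of both concepts.
midpoint = lerp(curious_cat_tree, playful_kitten_branch, 0.5)
print(midpoint)
```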

6. Autoregressive Generation — Predicting What Comes Next

What it is: Generating one token at a time, feeding each output back as input for the next step.

Example 1: After predicting “tree,” the model predicts the next word — maybe “in” — until the sentence is complete.
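
The loop itself is simple enough to sketch. In the toy version below, a hand-written probability table stands in for the trained model, and the table looks only at the last token, whereas a real model conditions on everything in its context window. All words and probabilities are invented for illustration.

```python
# Hypothetical next-token probabilities standing in for a trained model.
NEXT_TOKEN_PROBS = {
    "climbed": {"tree": 0.6, "sofa": 0.3, "mountain": 0.1},
    "tree":    {"in": 0.5, "<end>": 0.3, "and": 0.2},
    "in":      {"silence": 0.7, "<end>": 0.3},
    "silence": {"<end>": 1.0},
}

def generate(prompt_tokens: list[str], max_new_tokens: int = 10) -> list[str]:
    """Greedy autoregressive decoding: pick the likeliest token, append, repeat."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        probs = NEXT_TOKEN_PROBS.get(tokens[-1])
        if probs is None:
            break  # the toy table has no entry for this token
        next_token = max(probs, key=probs.get)
        if next_token == "<end>":
            break  # the model signals that the sentence is complete
        tokens.append(next_token)  # feed the output back in as input
    return tokens

print(generate(["The", "curious", "cat", "climbed"]))
# ['The', 'curious', 'cat', 'climbed', 'tree', 'in', 'silence']
```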

Now that we know the core concepts of generation, let’s understand why Transformers became the breakthrough architecture.

🚀 Why the Transformer Was a Breakthrough

Before Transformers (Attention Is All You Need, 2017), models like RNNs and LSTMs had two big limitations:

- Sequential bottleneck: processing tokens one at a time slowed training and blocked parallelization.

- Short memory: long‑range dependencies faded over time.

The Transformer solved both by:

- Replacing recurrence with self‑attention: allowing the model to look at all tokens at once.

- Enabling massive parallel computation: training on huge datasets quickly.

- Scaling cleanly: the modular design lets you stack more layers and heads without a redesign.

This leap took us from small, task‑specific models to today’s giant, general‑purpose generative models.

🏗 Understanding the Transformer Architecture and Its Elements

| Element | Role in the System | Why It Matters |
| --- | --- | --- |
| Input Embeddings | Convert discrete tokens into dense vectors | Creates a uniform, computable representation for any modality |
| Positional Encoding | Injects sequence or spatial order into embeddings | Self‑attention alone is order‑agnostic; this preserves structure |
| Multi‑Head Self‑Attention | Computes weighted relationships between all tokens in parallel | Captures both local and global dependencies without sequential steps |
| Feed‑Forward Networks | Apply non‑linear transformations to each token’s representation | Refines and enriches token features after context integration |
| Residual Connections | Pass original input forward alongside transformed output | Prevents information loss and stabilizes deep networks |
| Layer Normalization | Keeps activations stable and training efficient | Improves convergence and robustness |
| Output Projection | Maps final hidden states back to token probabilities or pixel values | Produces the actual generated output |
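
Of these elements, positional encoding is perhaps the least intuitive, so a short sketch may help. It follows the sinusoidal scheme from the original Transformer paper; many newer models use learned or rotary position encodings instead, and the four-dimensional embedding here is a made-up example.

```python
import math

def positional_encoding(position: int, d_model: int) -> list[float]:
    """Sinusoidal encoding: sine on even dimensions, cosine on odd ones."""
    encoding = []
    for i in range(d_model):
        # Dimensions 2k and 2k+1 share the same frequency.
        angle = position / (10000 ** ((i - i % 2) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

# Hypothetical embedding for one token.
embedding = [0.5, 0.1, -0.3, 0.8]

# The same token gets a different input vector depending on where it appears.
at_position_1 = [e + p for e, p in zip(embedding, positional_encoding(1, 4))]
at_position_5 = [e + p for e, p in zip(embedding, positional_encoding(5, 4))]

print(positional_encoding(0, 4))  # [0.0, 1.0, 0.0, 1.0]
print(at_position_1 != at_position_5)  # True
```

Because the position signal is added to the embedding, self-attention can distinguish the same word at two different places in the sequence.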

Now that we’ve unpacked the Transformer’s inner workings, it’s worth looking at how this architecture scales into the Large Language Models (LLMs) you’ve probably heard about — like GPT.

📈 Scaling Up — Large Language Models (LLMs) and GPT

Large Language Models like GPT are Transformer architectures scaled to massive proportions — billions or even trillions of parameters — trained on vast corpora of text (and, in multi‑modal cases, images, audio, or code).

The “large” refers to both model size and training data breadth. This scale allows them to:

- Capture subtle patterns in language

- Reason over long contexts

- Generalize to tasks they weren’t explicitly trained for

With fine‑tuning, their output can be adapted for specific tasks, tones, or safety guidelines.
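
To get a feel for where “billions of parameters” comes from, here is a back-of-the-envelope estimate. It assumes the standard block layout (four attention projection matrices plus a feed-forward network with a 4x hidden expansion) and ignores embeddings, biases, and normalization weights. The configuration plugged in is the commonly reported one for GPT-3 (96 layers, model width 12288).

```python
def approx_transformer_params(n_layers: int, d_model: int) -> int:
    """Rough weight count for the Transformer blocks only (an approximation)."""
    attention = 4 * d_model * d_model     # query, key, value, and output projections
    feed_forward = 8 * d_model * d_model  # two matrices with a 4x hidden expansion
    return n_layers * (attention + feed_forward)

estimate = approx_transformer_params(n_layers=96, d_model=12288)
print(f"{estimate:,}")  # 173,946,175,488
```

The estimate lands near the 175 billion figure usually quoted for that model, which shows how depth and width alone account for almost all of the size.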

🌀 Putting It All Together — From Concept to Creation

We’ve explored the core concepts. We’ve dissected the Transformer’s architecture. Now, let’s watch the system in motion — data packets flowing, context and meaning weaving together, and outputs emerging step-by-step.

Example 1 — Text‑Only: “The curious cat climbed…”

- Tokenization — Split into tokens: [“The”, “curious”, “cat”, “climbed”].

- Embedding — Map each token into a high‑dimensional vector.

- Context Window — Load all tokens into active memory.

- Self‑Attention — “Cat” gets more weight than “curious” for predicting what was climbed.

- Feed‑Forward Refinement — Enrich each token’s representation with context.

- Output Projection — Predict the next token: “tree” is most likely.

- Autoregression — Append “tree” and repeat until the sentence is complete.
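
The output projection step ends with a softmax that turns raw scores into probabilities. The sketch below uses invented scores for three candidate words and adds a `temperature` parameter, a common decoding knob that is not discussed above, to show how the same scores can be made more or less decisive.

```python
import math

def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert raw scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores (logits) for the token after "climbed".
candidates = ["tree", "sofa", "mountain"]
logits = [2.0, 1.0, -1.0]

probs = softmax(logits)
best = candidates[probs.index(max(probs))]

for word, p in zip(candidates, probs):
    print(f"{word:>8}: {p:.2f}")
print("next token:", best)  # next token: tree
```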

Example 2 — Multi‑Modal: “A German Shepherd wearing a red baseball cap”

Step-by-Step Breakdown of Image Generation from Text.

- Tokenization: The input prompt is broken into tokens: [“A”, “German”, “Shepherd”, “wearing”, “a”, “red”, “baseball”, “cap”]

- Embedding: Each token ID is converted into a vector embedding—a high-dimensional representation that captures meaning and relationships.

- Positional Encoding: Since Transformers don’t inherently understand order, positional encodings are added to embeddings to preserve the sequence:

  - “German” comes before “Shepherd”

  - “Red” comes directly before “baseball cap”

- Transformer Layers (Self-Attention): The model uses multi-head self-attention to understand how tokens relate:

  - “German” + “Shepherd” = breed

  - “Red” + “baseball cap” = accessory

  - “Wearing” connects the dog to the cap

- Cross-Attention (in image models): In image generation models like DALL·E or Stable Diffusion, the text embeddings are passed to the image generator, which uses cross-attention to let the prompt guide image generation:

  - The dog should be a German Shepherd

  - The cap should be red and baseball-style

  - The cap should be placed on the dog’s head

- Output Projection: The final hidden states are projected into pixel space or latent image space, producing the visual output. In diffusion models, generation starts from random noise (a coarse, patchy grid) that is refined step by step, guided by the text prompt. The image gradually takes shape, blending concepts like “German Shepherd” and “red baseball cap” with increasing detail, texture, and lighting until a coherent, high-resolution image emerges.
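
The “noise refined step by step” idea can be caricatured in a few lines. This is a deliberately simplified sketch, not a real diffusion model: the fixed `target` list stands in for what a trained denoising network would predict from the prompt, and four numbers stand in for an image.

```python
import random

def refine(noise: list[float], target: list[float],
           steps: int = 20, rate: float = 0.3) -> list[float]:
    """Move a noisy 'image' a fraction of the way toward the target each step."""
    image = list(noise)
    for _ in range(steps):
        image = [px + rate * (t - px) for px, t in zip(image, target)]
    return image

random.seed(0)  # fixed seed so the run is repeatable
target = [0.2, 0.8, 0.5, 0.1]                 # hypothetical "clean" pixel values
noise = [random.gauss(0, 1) for _ in target]  # start from pure random noise
result = refine(noise, target)

error_before = max(abs(n - t) for n, t in zip(noise, target))
error_after = max(abs(r - t) for r, t in zip(result, target))
print(f"error before: {error_before:.3f}, after: {error_after:.5f}")
```

Each pass removes a fraction of the remaining error, which is the same intuition behind an image sharpening over successive denoising steps.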

Closing Reflection — Architecture as Imagination Engine

From the smallest token to the vast expanse of latent space, from the precision of attention to the scale of LLMs, we’ve seen how each layer of design contributes to the act of generation.

Generative AI is not magic — it’s the outcome of deliberate architectural choices, scaled to extraordinary proportions. The Transformer didn’t just make models faster; it gave them the ability to hold context, connect ideas across distance, and compose something entirely new in real time.

Whether it’s predicting the next word in a sentence or painting pixels into a scene that has never existed before, the process is the same: a system built to understand, imagine, and create.

And that’s the real marvel — not that machines can generate, but that we can design them to do so with such elegance. The blueprint is here. The possibilities are still unfolding.

Up Next in the Series

We’ve explored what generative AI is, why it can generate, and the architecture that makes it possible. But there’s another crucial question: how do these models actually learn? In the next part of this series, we’ll step inside the training pipeline — from the oceans of raw data they consume, to the fine‑tuning and alignment that shape their personality and performance. If the Transformer is the blueprint, the training process is the construction crew that brings it to life.